Generative Models and Deep Reinforcement Learning for Geospatial Computer Vision

Presagis is a Montreal-based software company that supplies the top 100 defense and aeronautic companies in the world with simulation and graphics software. Over the last decade, Presagis has built a strong reputation for helping recreate the complexity of the real world in a virtual one. Their deep understanding of the defense and aeronautic industries, combined with expertise in synthetic environments, simulation & visualization, human-machine interfaces, and sensors, positions them to meet today’s goals and prepare for tomorrow’s challenges. Today, Presagis is investing heavily in research and innovation in virtual reality, artificial intelligence, and big data analysis. By leveraging their experience and recognizing emerging trends, their pioneering team of experts, former military personnel, and programmers is challenging the status quo and building tomorrow’s technology today.

The Immersive and Creative Technologies Lab (ICT Lab) was established in late 2011 as a premier research lab committed to fostering academic excellence, groundbreaking research, and innovative solutions within the field of Computer Science. Our team of researchers concentrates on specialized areas such as computer vision, computer graphics, virtual/augmented reality, and creative technologies, while exploring their applications across a diverse array of disciplines. At the ICT Lab, we pursue ambitious long-term objectives centered on the development of highly realistic virtual environments. Our primary objectives are (a) creating virtual worlds that are nearly indistinguishable from the real-world locations they represent, and (b) using these digital twins to produce a wide range of impactful visualizations for various applications. Through academic rigor, inventive research, and creative problem-solving, we aim to push the boundaries of technological innovation and contribute to the advancement of human knowledge.

Harshitha Voleti - MSc

Saikumar Iyer - MSc

Shubham Rajeev Punekar - PhD

Ahmad Shabani - PhD

Amin Karimi - PhD

Bodhiswatta Chatterjee - PhD

Damian Bowness - MSc - [graduated]

Naghmeh Shafiee Roudbari - PhD - [graduated]

Jatin Katyal - MSc - [graduated]

Sacha Lepretre - Presagis Inc (CTO)

Charalambos Poullis - Concordia (PI)

Generative Modeling

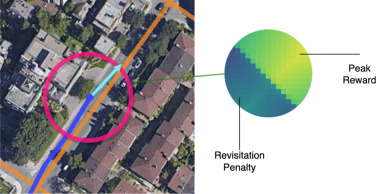

Deep Reinforcement Learning

Charalambos Poullis

Immersive and Creative Technologies Lab

Department of Computer Science and Software Engineering

Concordia University

1455 de Maisonneuve Blvd. West, ER 925,

Montréal, Québec,

Canada, H3G 1M8

Copyright © 2022 Immersive and Creative Technologies Lab, Concordia University. All Rights Reserved.